I’m embarrassed to admit this, but one thing that I’ve been struggling with lately is finding my glasses on my desk at work. I set them down and suddenly they’re nowhere to be found. I’ve even occasionally had to ask another person to come and help me find them. Why is this so challenging? Even if we set aside a couple of obvious problems — the fact that my desk is a mess, and that the world looks a little blurrier without my glasses – this process of visual search, in which we look for relevant information in our environment, is constrained by our visual attention.

These limitations arise from the fact that we first process visual information by its basic features – things like color or orientation (e.g., the color red). However, in order to register combinations of these different features together (for example, an object as being both red and horizontal), we need to direct our attention to the appropriate spatial location. This becomes important when we search for relevant information in our environment. In these cases, we prioritize information based on the features that we’re looking for (e.g., we’d pay attention to all of the red items and ignore the purple ones). In other words, these features guide our attention.

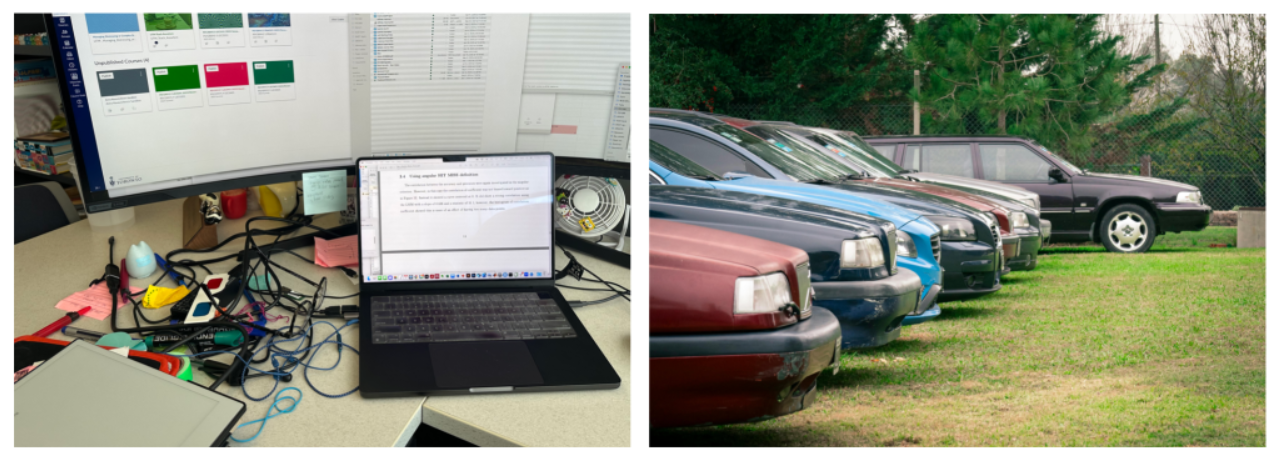

If you consider the couple of examples below, my glasses are difficult to find in the image on the left, in part because you’re looking for thin dark-colored lines, surrounded by other dark-colored things with similar outlines. In contrast, if I now ask you to look for the blue car in the image on the right, this takes very little time because it is the only blue thing in the scene.

These are classic ideas that underlie Feature Integration Theory (FIT) and Guided Search (GS), and research over the last few decades has been devoted to understanding how these attentional processes operate with simple geometric shapes. But can we apply the same principles to more complex features, like the kinds of features that we encounter in everyday scenes? Imagine for a moment that you’re looking at a set of different scene images (for example, browsing a catalog or scrolling through real estate listings). These images might vary in their higher-level attributes, like their lighting or the layout. Do these complex properties guide your attention in the same way? These are the questions that Gaeun Son, Michael Mack, and Dirk Bernhardt-Walther (pictured below) investigated in a recent paper in the Psychonomic Society journal Attention, Perception, and Psychophysics.

To understand this, let’s first consider how visual attention to simple features is measured in classic laboratory tasks. Have a look at the image below on the left. If you’re looking for the tilted grey bar among black vertical bars, you’re going to be very fast, and your response time is going to stay pretty much the same regardless of how many black vertical segments you add. This item (a singleton) can be found without needing to individually attend to each item in the display. Its distinguishing features (color and orientation) are said to be available preattentively. This is a little more difficult in the middle panel because there are now two types of distractors, but this can still be found very efficiently. However, if you consider the example on the far right, it takes some time to find the gray tilted bar because you need to look for a specific conjunction of features – a specific color and orientation (in this case, the gray and tilted bar) to tell it apart. To do this, you need to attend to the items individually, and the time it takes you to find it will increase with the number of items in the display.

How do these basic principles of visual attention apply to scene images? To examine this, the authors used AI-generated scene images that were created with the StyleGAN model. This model allowed them to tightly control high-level scene features to test whether they are available preattentively, much like color or orientation. In one experiment, they varied two types of features simultaneously: the lighting and the layout. If you look at the grid of images below (upper panel), you can see that the indoor lighting changes as you go from left to right, and that the layout shifts as you go from top to bottom. The authors used these to create different types of search displays, to parallel those used with simple geometric shapes shown above. Participants looked for specific scene images and indicated whether they were in the display or not.

The authors predicted that if these features guide visual attention, much like the simpler features we saw earlier, we should see similar patterns of response times. So what did these results look like?

In the graphs below, you can see that response times increase as you increase the number of items in the display, even when looking for the singleton. This suggests that these types of complex scene features (lighting and layout) are not available preattentively. Instead, we need to individually attend to each item to figure out if it matches the target. Interestingly, however, there are some differences in the slopes between these conditions. We see that slopes were steepest when participants looked for a combination of features (for example, daylight and with the room angled to the left), and shallower when looking for an individual feature (e.g., daylight). This matches up well with what we saw with those simple geometric displays.

What does this all mean? According to the authors, “Even though complex features of a scene—like how a room is lit or arranged—don’t pop out to us instantly, our attention is still guided by them in meaningful, bundled ways, much like how classic attention theories explain visual search.” So, regardless of whether you’re looking for something specific, a catalog full of images, or helping me find my glasses on my desk, you still use information from certain features to make your search more efficient. If you’re looking for a dimly lit scene, you can constrain your search usefully by ignoring the daylight scenes, just like you would ignore the brightly colored figurine when looking for my glasses. These features guide our attention as we look for the information around us, even in the kinds of complex scenes we encounter in daily life.

Psychonomic Society article featured in this post

Son, G., Mack, M. L., & Walther, D. B. (2025). Attention to complex scene features. Attention, Perception, & Psychophysics, 1-23. https://doi.org/10.3758/s13414-025-03081-y

About the author

Anna Kosovicheva is an Assistant Professor in the Department of Psychology at the University of Toronto, Mississauga. Her research focuses on visual localization and spatial and binocular vision, with an emphasis on the application of vision research to real-world problems.

This article was originally published by the Psychonomic Society as part of their Featured Content series.